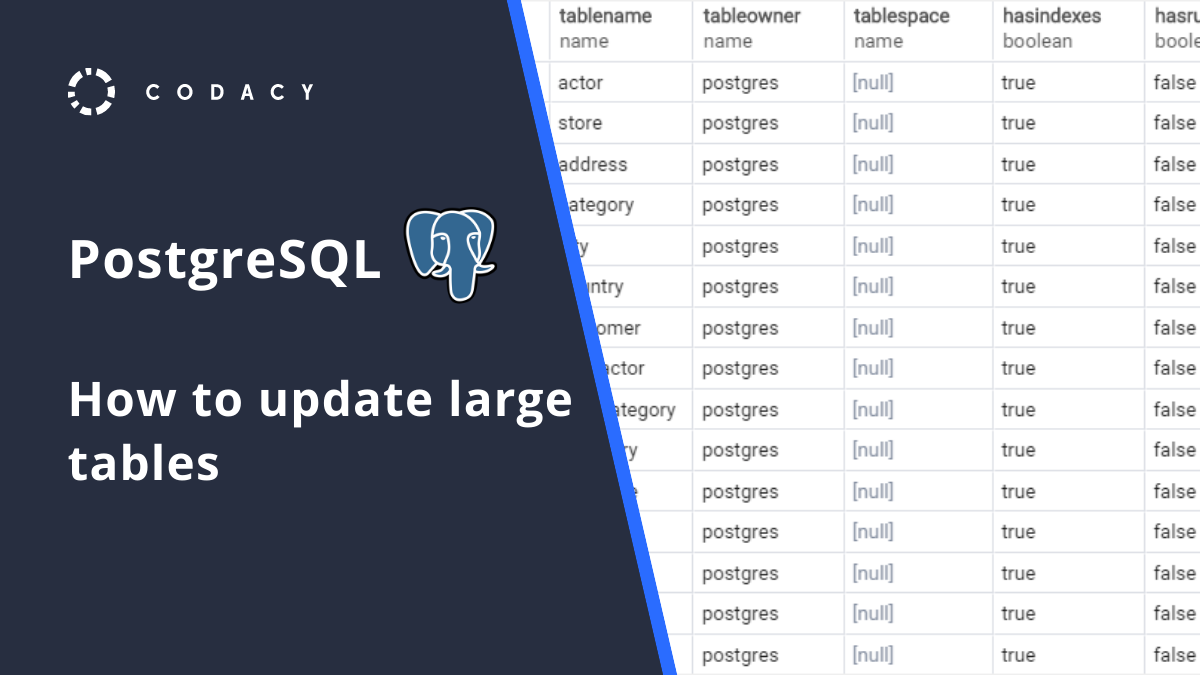

PostgreSQL: How to update large tables

Updating a large table in PostgreSQL, an advanced open-source database management system, is not straightforward. If you have a table with hundreds of millions of rows you will find that simple operations, such as adding a column or changing a column type, are hard to do in a timely manner.

Doing these kind of operations without downtime is an even harder challenge. In this blog post I will try to outline guidelines and strategies to minimize the impact in table availability while managing large data sets.

General Guidelines For PostgreSQL Table Updates

When you update a value in a column, Postgres writes a whole new row in the disk, deprecates the old row and then proceeds to update all indexes. This process is equivalent to an INSERT plus a DELETE for each row which takes a considerable amount of resources.

Besides this, here is a list of things that you should know when you need to update large tables:

- It is faster to create a new table from scratch than to update every single row. Sequential writes are faster than sparse updates. You also don’t get dead rows at the end.

- Table constraints and indexes heavily delay every write. If possible, you should drop all the indexes, triggers and foreign keys while the update runs and recreate them at the end.

- Adding a nullable column without a default value is a cheap operation. Writing the actual data of the column is the expensive part.

- Data stored in TOAST is not rewritten when the row is updated

- Converting between some data types does not require a full table rewrite since Postgres 9.2. Ex: conversion from VARCHAR(32) to VARCHAR(64).

Strategies To Update Tables In PostgresSQL

With this in mind, let’s look at a few strategies that you can use to effectively update a large number of rows in your table in PostgreSQL:

1. Incremental updates

If you can segment your data using, for example, sequential IDs, you can update rows incrementally in batches. This maximizes your table availability since you only need to keep locks for a short period of time. When adding a new column, you can temporarily set it as nullable then gradually fill it with new values.

The main problem with this approach is the performance. It is a very slow process because in place updates are costly. It may also require more complex application logic during the migration.

2. Create a new table

The fastest way to update a large table is to create a new one.

If you can safely drop the existing table and if there is enough disk space. Then, the easiest way to perform the update is to insert the data into a new table and rename it afterwards. Here is a script with the base structure for this operation:

CREATE TABLE new_tbl ( field1 int, field2 int, ... );

INSERT INTO new_tbl(field1, field2, ...) ( SELECT FROM ... -- use your new logic here to insert the updated data )

CREATE INDEX -- add your constraints and indexes to new_tbl

DROP TABLE tbl;

ALTER TABLE tbl_new RENAME TO tbl;

3. Recreate the existing table

If you can’t drop the original table because you don’t want to recreate views or there are other unique constraints, then you can use a temporary table to hold the new values, truncate the old table and rewrite the data there. This method has some advantages when you have pending write requests. We will demonstrate this in the next section.

If your table can fit in memory you should increase the temp_buffers during this transaction. Using RAM instead of the disk to store the temporary table will obviously improve the performance:

SET temp_buffers = 3000MB; — change this value accordingly

-- create and populate the temporary table CREATE TEMP TABLE tmp_tbl ( field1 int, field2 int, ... );

INSERT INTO tmp_tbl(field1, field2, ...) ( SELECT FROM ... )

-- DROP indexes from tbl if needed

TRUNCATE tbl;

-- alter tbl and add new columns if needed

INSERT INTO tbl SELECT * FROM tmp_tbl; -- insert the rows back

-- recreate all indexes if needed

3. Handling Concurrent Writes

Even with the aforementioned optimizations, recreating your table in PostgreSQL is a slow operation. If you are running the queries in a live database you may need to handle concurrent write requests.

The easiest way to do this is to force a SHARE LOCK on the table during the transaction:

LOCK TABLE tbl IN SHARE MODE;

All the write requests will wait until the lock is released or timeout if it takes too long. The requests that did not timeout will be executed once the transaction ends if the original parent table was not dropped. Note that, even if you create a new table with the same name the requests will still fail because they use the table OID.

Depending on the nature of your write requests you can also create custom rules to store changes made. For example, you can set a rule to record the deleted rows before you start the data migration:

CREATE RULE deleted_rule AS ON DELETE TO tbl DO INSERT INTO tbl_deletes VALUES ( OLD.id );

When the migration ends, you just have to read the IDs from tbl_deletes and delete them on the new table. A similar method can be used to handle other types of requests.

Conclusion

Once you reach a certain size, operations that were once instantaneous can take several hours to prepare and execute. At Codacy we receive thousands of write requests every minute and we manage hundreds of gigabytes of data. Developing new features and improving the database performance without hurting availability is a challenge that we try to solve every day. This article contains some of the things we learned while dealing with these problems.

The Postgresql documentation and some stack exchange answers have more in depth information about some topics mentioned here and are worth checking if you need more details on PostgreSQL.

Feel free to ask questions and make suggestions in the comment section, we love to learn new and better ways of doing things while working at scale.

-4.png?width=974&height=403&name=image%20(1)-4.png)